Novita AI & Portkey Integration Guide

Streamline AI development by using Portkey AI Gateway with Novita AI for fast, secure, and reliable performance.

Portkey AI Gateway gives developers a unified interface to multiple language models and providers, including Novita AI, with fast, secure, and reliable routing. Using the two together simplifies AI development and helps keep applications responsive.

This guide walks you through setting up Portkey AI Gateway and then integrating the Novita AI API with it.

How to Set Up Portkey AI Gateway

Setting up Portkey AI Gateway takes three steps: starting the gateway, sending your first request, and configuring routing and guardrails.

Step 1: Set up your AI Gateway

To run the gateway locally, make sure Node.js and npm are installed on your system. Once they are, start the gateway from the command line.
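As a minimal sketch of this step, the snippet below checks that the Node.js tooling is on your PATH and then launches the gateway. It assumes the gateway is started with `npx @portkey-ai/gateway`, which is not stated in this guide, so confirm the command against Portkey's documentation. `missing_tools` and `start_gateway` are hypothetical helper names.

```python
import shutil
import subprocess

# Assumed launch command for the gateway's npm package; verify before relying on it.
GATEWAY_COMMAND = ["npx", "@portkey-ai/gateway"]
REQUIRED_TOOLS = ("node", "npm", "npx")


def missing_tools(tools=REQUIRED_TOOLS):
    """Return the required tools that are not found on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


def start_gateway():
    """Launch the gateway in the foreground; blocks until the process exits."""
    missing = missing_tools()
    if missing:
        raise RuntimeError(f"Install these first: {', '.join(missing)}")
    return subprocess.run(GATEWAY_COMMAND, check=True)
```

Calling `start_gateway()` occupies the terminal until you stop the process, so run it in a separate shell from the one you use for requests.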

After the gateway starts, two key URLs are displayed:

- The Gateway: http://localhost:8787/v1
- The Gateway Console: http://localhost:8787/public/
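Before moving on, it can help to confirm that both URLs respond. The following is a rough sketch using only the Python standard library; `is_reachable` is a hypothetical helper, and it treats any HTTP response (including an error status) as proof that the server is up.

```python
import urllib.error
import urllib.request

GATEWAY_URL = "http://localhost:8787/v1"
CONSOLE_URL = "http://localhost:8787/public/"


def is_reachable(url: str, timeout: float = 2.0) -> bool:
    """Return True if any HTTP response comes back from `url`."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server answered, just not with a 2xx status.
        return True
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure, and similar.
        return False


for name, url in (("Gateway", GATEWAY_URL), ("Console", CONSOLE_URL)):
    print(f"{name}: {'up' if is_reachable(url) else 'not reachable'}")
```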

Step 2: Make your first request

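Begin by installing the Portkey AI Python library, then use Python to send your first request through the gateway.

You can then monitor all your local logs in one place using the Gateway Console at http://localhost:8787/public/.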
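The install-and-request step might look like the sketch below. It assumes the library is installed with `pip install portkey-ai`, that Novita AI is selected with the provider slug `novita-ai`, and that your key lives in an environment variable named `NOVITA_API_KEY`. The slug and variable name are assumptions, not taken from this guide, so check them against Portkey's provider list. `build_messages` and `send_first_request` are hypothetical helper names.

```python
import os

GATEWAY_URL = "http://localhost:8787/v1"  # the Gateway URL from Step 1


def build_messages(prompt: str) -> list[dict[str, str]]:
    """Wrap a prompt in the chat-completions message format."""
    return [{"role": "user", "content": prompt}]


def send_first_request(prompt: str, model: str) -> str:
    """Send one chat completion through the local gateway and return the reply text."""
    # Imported lazily so this module loads even before the SDK is installed.
    from portkey_ai import Portkey

    client = Portkey(
        base_url=GATEWAY_URL,
        provider="novita-ai",  # assumed provider slug; verify before use
        Authorization=os.environ["NOVITA_API_KEY"],  # assumed env var name
    )
    response = client.chat.completions.create(
        model=model,
        messages=build_messages(prompt),
    )
    return response.choices[0].message.content
```

With the gateway running, call `send_first_request("Hello!", model=...)` and pass a model ID that your Novita AI account supports. The request should then appear in the Gateway Console logs.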

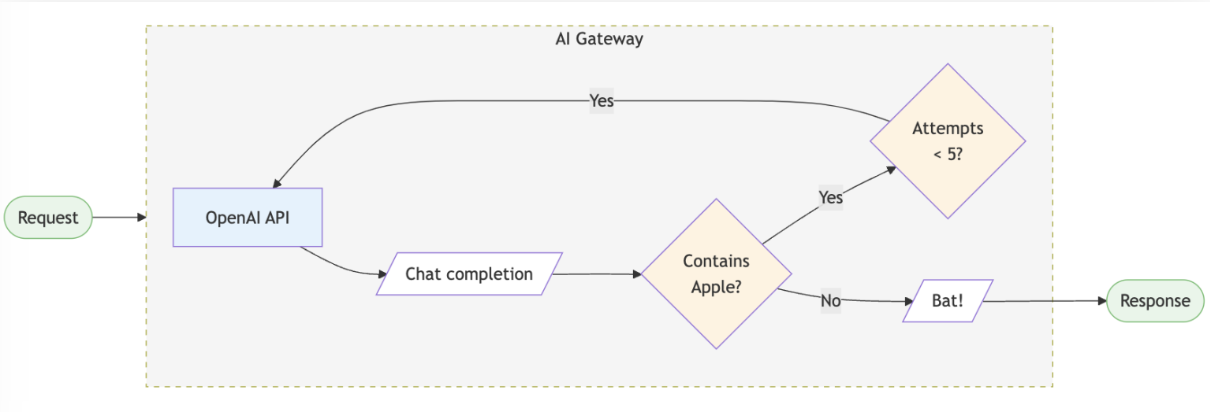

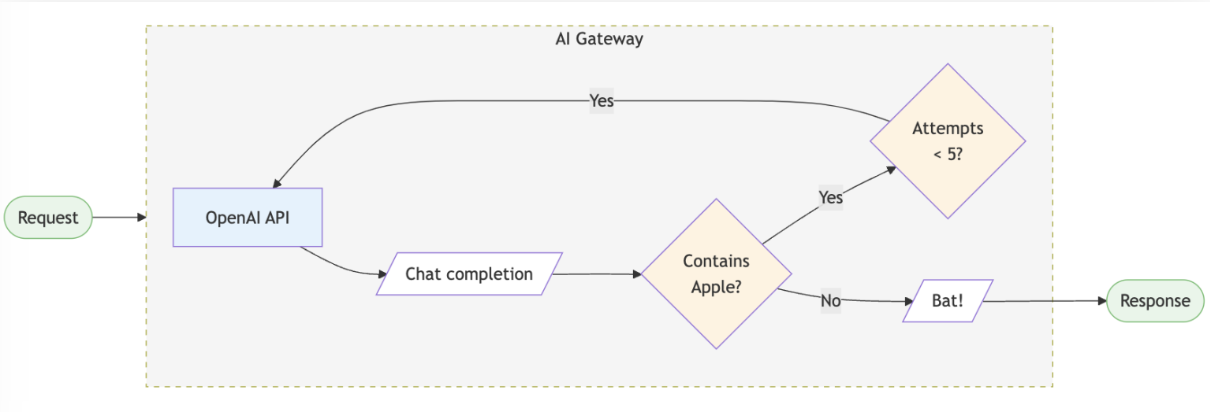

Step 3: Routing & Guardrails

Portkey AI Gateway enables you to configure routing rules, add reliability features, and enforce guardrails. Below is an example configuration:
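As a minimal sketch of such a configuration, the snippet below builds a config with a retry policy and one output guardrail. The key names (`retry`, `output_guardrails`, `default.contains`) are modeled on Portkey's published examples and should be treated as assumptions here, and the blocked word is purely illustrative. Routing rules are not shown. `build_gateway_config` and `apply_config` are hypothetical helper names.

```python
import json


def build_gateway_config(retry_attempts: int = 5, blocked_words=("Apple",)) -> dict:
    """Build a gateway config with retries and one output guardrail.

    Key names are assumptions modeled on Portkey's published examples.
    """
    return {
        # Reliability: retry failed requests up to `retry_attempts` times.
        "retry": {"attempts": retry_attempts},
        # Guardrail: the check passes only if none of the words appear;
        # `deny` asks the gateway to block the response when the check fails.
        "output_guardrails": [
            {
                "default.contains": {"operator": "none", "words": list(blocked_words)},
                "deny": True,
            }
        ],
    }


def apply_config(client, config: dict):
    """Return a copy of a Portkey client with the config attached."""
    return client.with_options(config=config)


print(json.dumps(build_gateway_config(), indent=2))
```

Building the config as a plain dictionary keeps it easy to inspect and version. `apply_config` expects a Portkey client such as the one created in Step 2, and requests made with the returned client carry the retry and guardrail settings.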